CovOps

Location : Ether-Sphere

Job/hobbies : Irrationality Exterminator

Humor : Über Serious

Subject: The Third Revolution in Warfare    Thu Sep 16, 2021 8:11 pm

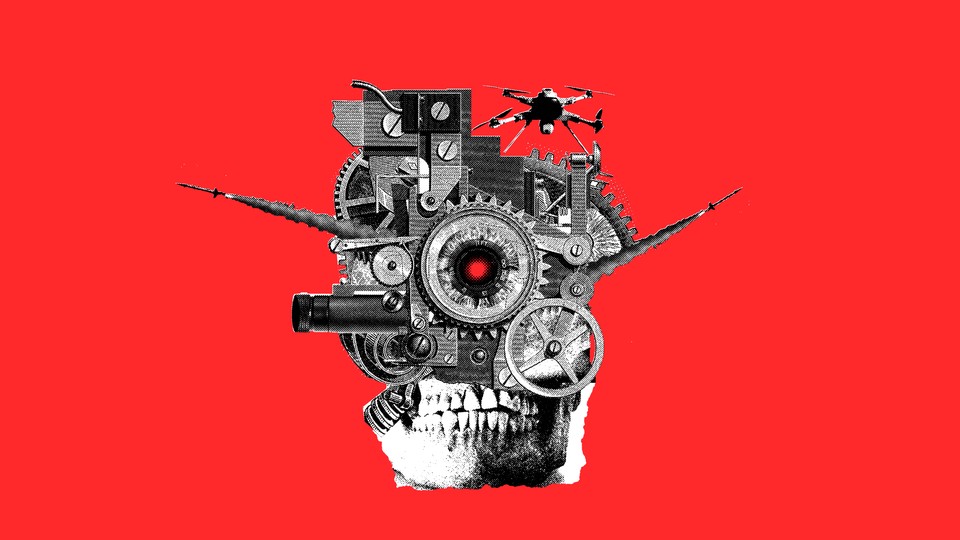

First there was gunpowder. Then nuclear weapons. Next: artificially intelligent weapons.

On the 20th anniversary of 9/11, against the backdrop of the rushed U.S.-allied Afghanistan withdrawal, the grisly reality of armed combat and the challenge posed by asymmetric suicide terror attacks grow harder to ignore.

But weapons technology has changed substantially over the past two decades. And thinking ahead to the not-so-distant future, we must ask: What if these assailants were able to remove human suicide bombers or attackers from the equation altogether? As someone who has studied and worked in artificial intelligence for the better part of four decades, I worry about this kind of technological threat, born from artificial intelligence and robotics.

Autonomous weaponry is the third revolution in warfare, following gunpowder and nuclear arms. The evolution from land mines to guided missiles was just a prelude to true AI-enabled autonomy—the full engagement of killing: searching for, deciding to engage, and obliterating another human life, completely without human involvement.

An example of an autonomous weapon in use today is the Israeli Harpy drone, which is programmed to fly to a particular area, hunt for specific targets, and then destroy them using a high-explosive warhead nicknamed “Fire and Forget.” But a far more provocative example is illustrated in the dystopian short film Slaughterbots, which tells the story of bird-sized drones that can actively seek out a particular person and shoot a small amount of dynamite point-blank through that person’s skull. These drones fly themselves and are too small and nimble to be easily caught, stopped, or destroyed.

These “slaughterbots” are not merely the stuff of fiction. One such drone nearly killed the president of Venezuela in 2018, and one could be built today by an experienced hobbyist for less than $1,000. All of the parts are available for purchase online, and all of the open-source software needed is available for download. This is an unintended consequence of AI and robotics becoming more accessible and inexpensive. Imagine: a $1,000 political assassin! And this is not a far-fetched danger for the future but a clear and present one.

We have witnessed how quickly AI has advanced, and these advancements will accelerate the near-term future of autonomous weapons. Not only will these killer robots become more intelligent, more precise, faster, and cheaper; they will also learn new capabilities, such as how to form swarms with teamwork and redundancy, making their missions virtually unstoppable. A swarm of 10,000 drones that could wipe out half a city could theoretically cost as little as $10 million, at roughly $1,000 per drone.

Even so, autonomous weapons are not without benefits. Autonomous weapons can save soldiers’ lives if wars are fought by machines. Also, in the hands of a responsible military, they can help soldiers target only combatants and avoid inadvertently killing friendly forces, children, and civilians (similar to how an autonomous vehicle can brake for the driver when a collision is imminent). Autonomous weapons can also be used defensively against assassins and perpetrators.

But the downsides and liabilities far outweigh these benefits. The strongest such liability is moral—nearly all ethical and religious systems view the taking of a human life as a contentious act requiring strong justification and scrutiny. United Nations Secretary-General António Guterres has stated, “The prospect of machines with the discretion and power to take human life is morally repugnant.”

Autonomous weapons lower the cost to the killer. Giving one’s life for a cause, as suicide bombers do, is still a high hurdle for anyone. But with autonomous assassins, no lives would have to be given up in order to kill. Another major issue is establishing a clear line of accountability: knowing who is responsible in case of an error. This is well established for soldiers on the battlefield. But when the killing is assigned to an autonomous-weapon system, the accountability is unclear (much like the ambiguity over who is accountable when an autonomous vehicle runs over a pedestrian).

Such ambiguity may ultimately absolve aggressors for injustices or violations of international humanitarian law. And this lowers the threshold of war and makes it accessible to anyone. A further related danger is that autonomous weapons can target individuals, using facial or gait recognition, and the tracing of phone or IoT signals. This enables not only the assassination of a single person but also the genocide of an entire group of people. One of the stories in my new “scientific fiction” book based on realistic possible-future scenarios, AI 2041, which I co-wrote with the sci-fi writer Chen Qiufan, describes a Unabomber-like scenario in which a terrorist carries out the targeted killing of business elites and high-profile individuals.

Greater autonomy without a deep understanding of meta issues will further boost the speed of war (and thus casualties) and will potentially lead to disastrous escalations, including nuclear war. AI is limited by its lack of common sense and of the human ability to reason across domains. No matter how much you train an autonomous-weapon system, the limits of its domain will keep it from fully understanding the consequences of its actions.

In 2015, the Future of Life Institute published an open letter on AI weapons, warning that “a global arms race is virtually inevitable.” Such an escalatory dynamic represents familiar terrain, whether the Anglo-German naval-arms race or the Soviet-American nuclear-arms race. Powerful countries have long fought for military supremacy. Autonomous weapons offer many more ways to “win” (the smallest, fastest, stealthiest, most lethal, and so on).

Pursuing military might through autonomous weaponry could also cost less, lowering the barrier to entry for such global-scale conflicts. Smaller countries with powerful technologies, such as Israel, have already entered the race with some of the most advanced military robots, including some as small as flies. With the near certainty that one’s adversaries will build up autonomous weapons, ambitious countries will feel compelled to compete.

Where will this arms race take us? Stuart Russell, a computer-science professor at UC Berkeley, says, “The capabilities of autonomous weapons will be limited more by the laws of physics—for example, by constraints on range, speed, and payload—than by any deficiencies in the AI systems that control them. One can expect platforms deployed in the millions, the agility and lethality of which will leave humans utterly defenseless.” This multilateral arms race, if allowed to run its course, will eventually become a race toward oblivion.

Nuclear weapons are an existential threat, but they’ve been kept in check, and have even helped reduce conventional warfare, thanks to deterrence theory. Because a nuclear war leads to mutually assured destruction, any country launching a nuclear first strike is likely to face retaliation and thus self-destruction.

But autonomous weapons are different. Deterrence theory does not apply, because a surprise first attack may be untraceable. As discussed earlier, autonomous-weapon attacks can quickly trigger a response, and escalations can be very fast, potentially leading to nuclear war. The first attack may not even be launched by a country but by terrorists or other non-state actors, which heightens the danger of autonomous weapons even further.

There have been several proposed solutions for avoiding this existential disaster. One is the human-in-the-loop approach, or making sure that every lethal decision is made by a human. But the prowess of autonomous weapons largely comes from the speed and precision gained by not having a human in the loop. This debilitating concession may be unacceptable to any country that wants to win the arms race. Human inclusion is also hard to enforce and easy to avoid. And the protective quality of having a human involved depends very much on the moral character and judgment of that individual.

A second proposed solution is a ban, which has been supported by both the Campaign to Stop Killer Robots and a letter signed by 3,000 people, including Elon Musk, the late Stephen Hawking, and thousands of AI experts. Similar efforts have been undertaken in the past by biologists, chemists, and physicists against biological, chemical, and nuclear weapons. A ban will not be easy, but previous bans against blinding lasers and chemical and biological weapons appear to have been effective. The main roadblock today is that the United States, the United Kingdom, and Russia all oppose banning autonomous weapons, stating that it is too early to do so.

A third approach is to regulate autonomous weapons. This will likewise be complex, because it is difficult to write technical specifications that are effective without being overly broad. What defines an autonomous weapon? How do you audit for violations? These are all extraordinarily difficult short-term obstacles. In the very long term, creative solutions might be possible, though they are difficult to imagine. For example, could countries agree that all future wars will be fought only with robots (or, better yet, only in software), resulting in no human casualties but still delivering the classic spoils of war? Or perhaps there’s a future in which wars are fought with humans and robots, but the robots are permitted to use only weapons that disable robot combatants and are harmless to human soldiers.

Autonomous weapons are already a clear and present danger, and will become more intelligent, nimble, lethal, and accessible at an alarming speed. The deployment of autonomous weapons will be accelerated by an inevitable arms race that will lack the natural deterrence of nuclear weapons. Autonomous weapons are the AI application that most clearly and deeply conflicts with our morals and threatens humanity.

https://www.theatlantic.com/technology/archive/2021/09/i-weapons-are-third-revolution-warfare/620013/

_________________

Anarcho-Capitalist, AnCaps Forum, Ancapolis, OZschwitz Contraband

“The state calls its own violence law, but that of the individual, crime.”-- Max Stirner

"Remember: Evil exists because good men don't kill the government officials committing it." -- Kurt Hofmann